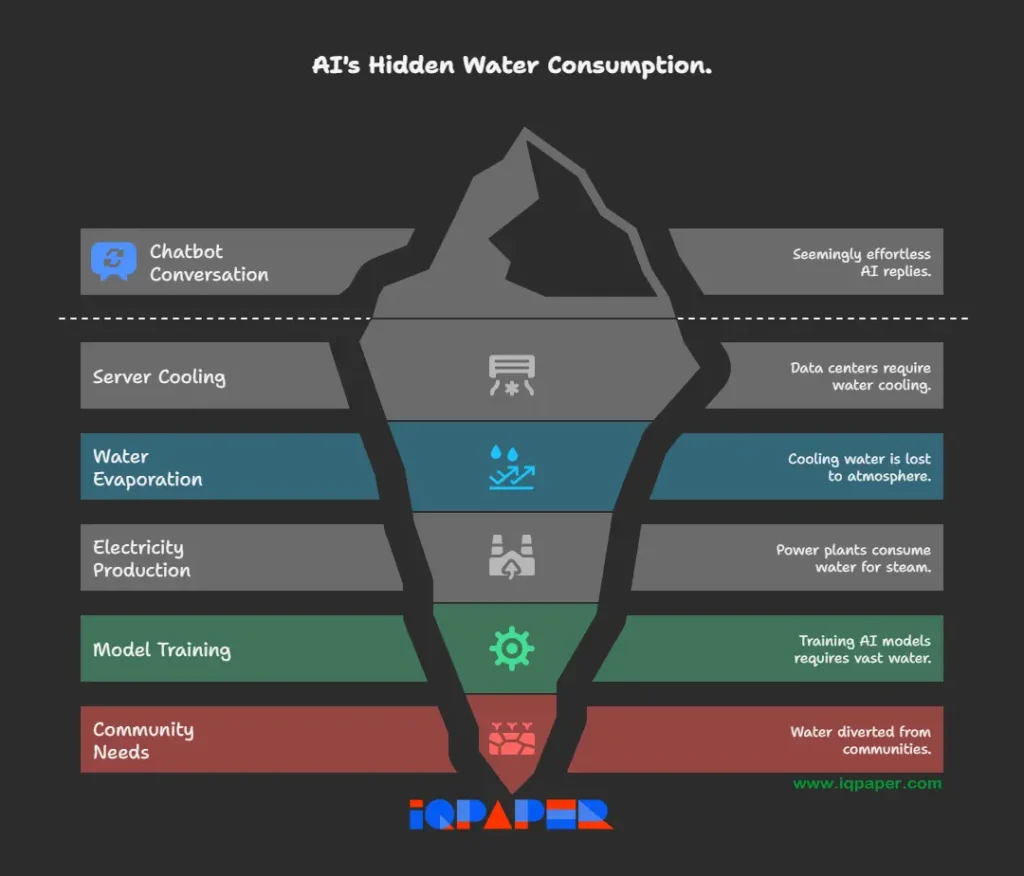

Artificial intelligence (AI) has rapidly become a daily companion. Every time you type a question into ChatGPT or another AI assistant, the reply seems effortless—words appear instantly on your screen. Yet hidden behind those sentences is an unexpected cost: a sip of drinking water.

Sam Altman, the CEO of OpenAI, once described it in curious terms: about one-fifteenth of a teaspoon of water is used for each ChatGPT query. On its own, that drop feels trivial. But when multiplied across more than a billion daily prompts, those drops cascade into a river of consumption.
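
To see how the drops add up, here is a rough back-of-the-envelope sketch of that multiplication. It assumes a US teaspoon of about 4.93 mL and exactly one billion prompts a day as a round lower bound. Neither number comes from OpenAI, and the function names are only illustrative.

```python
# Assumptions (not from the article): a US teaspoon is about 4.93 mL,
# and "more than a billion daily prompts" is rounded down to one billion.
TEASPOON_ML = 4.92892
PROMPTS_PER_DAY = 1_000_000_000


def water_per_query_ml(teaspoon_fraction: float = 1 / 15) -> float:
    """Water per query in millilitres for a given fraction of a teaspoon."""
    return TEASPOON_ML * teaspoon_fraction


def daily_water_litres(prompts: int = PROMPTS_PER_DAY) -> float:
    """Total water per day in litres at the per-query figure above."""
    return water_per_query_ml() * prompts / 1000  # mL -> L


if __name__ == "__main__":
    print(f"{water_per_query_ml():.2f} mL per query")
    print(f"{daily_water_litres():,.0f} L per day")
```

Under these assumptions, each query uses about a third of a millilitre, and a billion of them come to roughly 330,000 litres a day.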

The Hidden Path from Prompt to Water Loss

Why does software need water at all? The answer lies not in the code, but in the machines that run it.

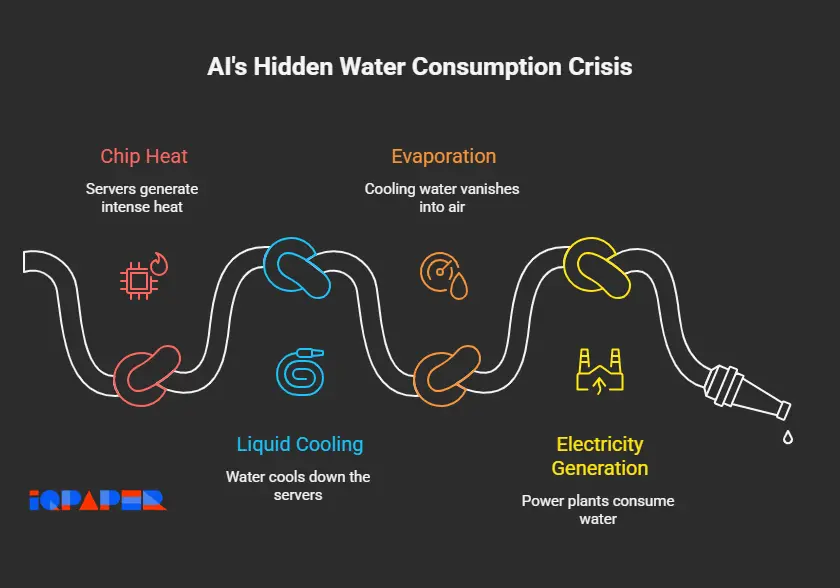

- Heat from powerful chips: Every prompt sent to an AI assistant runs on powerful servers in vast data centers. These servers generate intense heat, and even more during the training of large models like GPT-3. To prevent overheating, companies rely on cooling systems, many of which depend on high-quality water. Unlike water for many other industrial uses, this water often must be drinking-grade, so that it carries no bacteria and poses little risk of corrosion.

- Cooling the giants: To stop servers from melting down, cooling systems kick in. Air was once enough, but modern AI demands far more efficiency. Liquid cooling, often with clean, treated water, has become the norm. Water absorbs heat from the servers and is then sent to cooling towers.

- Evaporation: In many centers, as much as 80% of the cooling water vanishes into the air as vapor, never to be recovered. This means that vast amounts of water are effectively lost to the atmosphere.

Add in the fact that electricity itself is thirsty—coal, gas, and nuclear plants consume water to produce steam power—and suddenly a “simple chat” feels far less weightless.

Measuring the Invisible

Here’s where the debate heats up. Altman’s “teaspoon math” is a neat soundbite, but independent researchers argue the true cost is higher. Some analyses suggest that answering 10 to 50 questions may drain half a liter of water—the equivalent of a standard water bottle.
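
A quick sketch shows how far apart the two estimates are. It takes the half-liter figure quoted above at face value and again assumes a US teaspoon of about 4.93 mL. The names are illustrative, and the result is only as good as those inputs.

```python
BOTTLE_ML = 500.0  # half a liter, the figure quoted for 10 to 50 questions
TEASPOON_ML = 4.92892  # assumption: US teaspoon, not stated in the article
ALTMAN_ML_PER_QUERY = TEASPOON_ML / 15  # "one-fifteenth of a teaspoon"


def per_query_range_ml(min_queries: int = 10, max_queries: int = 50):
    """Return (low, high) water per query in mL implied by the bottle figure."""
    low = BOTTLE_ML / max_queries   # water spread over the most queries
    high = BOTTLE_ML / min_queries  # water spread over the fewest queries
    return low, high


def ratio_to_altman(ml_per_query: float) -> float:
    """How many times larger a per-query estimate is than the teaspoon figure."""
    return ml_per_query / ALTMAN_ML_PER_QUERY


if __name__ == "__main__":
    low, high = per_query_range_ml()
    print(f"{low:.0f} to {high:.0f} mL per query")
    print(f"{ratio_to_altman(low):.0f}x to {ratio_to_altman(high):.0f}x the teaspoon figure")
```

On these assumptions, the independent range works out to 10 to 50 mL per question, roughly 30 to 150 times the teaspoon figure. The two estimates may not count the same things, so the gap is indicative rather than exact.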

And that’s just usage. Training a massive model like GPT-3 reportedly required hundreds of thousands of liters during its build phase alone.

Tension at the Tap

These figures might feel abstract until they collide with local realities. Communities in Spain, India, Chile, Uruguay, and several U.S. states have all raised concerns, noting that the same fresh water being diverted into AI systems is also needed for crops, households, and daily hygiene.

In areas already battling scarcity, the expansion of AI data centers can feel like an unwelcome guest at the table—competing for the last glass of water.

Searching for Smarter Solutions

Big technology firms know the optics and the urgency. Google, Microsoft, and Meta have all promised to become “water positive” by 2030, pledging to return more water than they consume.

Meanwhile, engineers are experimenting with:

- Closed-loop cooling that minimizes evaporation.

- Recycling server heat to warm nearby homes.

- Unconventional sites for future data centers—from the depths of the ocean to the cold edges of the Arctic.

Whether these measures can keep pace with AI’s rapid growth remains to be seen.

A Glass Half Full—or Half Empty?

Artificial intelligence is reshaping how we work, learn, and create. But it also reminds us that no digital miracle comes free of physical limits. Water, the most basic of resources, is now part of the cost of every chatbot conversation.

The challenge is not to abandon the technology, but to design it responsibly—so that innovation doesn’t quietly dry up the wells we all depend on.

